In April of this year, Cerebras released a new version of their wafer-scale chip, or, as they call it, the Wafer Scale Engine (WSE). The first WSE, released in 2019, featured 1.2 trillion transistors, 400,000 compute cores and 18 GB of on-chip memory. The new chip, WSE 2, boasts 2.6 trillion transistors and 850,000 ‘AI optimized’ cores, all on a chip the size of a vinyl record.

Cerebras developed the chip to meet the growing need for AI compute power. While Moore’s Law is popularly taken to mean that processor performance doubles every eighteen months, the demand for AI compute now doubles roughly every three and a half months. Keeping up with that pace requires the most powerful chips ever designed.

AI compute power requires actual electrical power. Unsurprisingly, if still astonishingly, the WSE 2 draws 23 kW of power. To put this in perspective, the most powerful GPUs “only” draw around 450 W. Since the energy any chip uses gets turned into heat, and excess heat can lead to thermal throttling of performance, thermal management becomes critical with these devices. Whether in a gaming PC or a GPU cluster, significant overhead therefore goes to bulky cooling devices, including heat sinks, fans and liquid cooling systems.

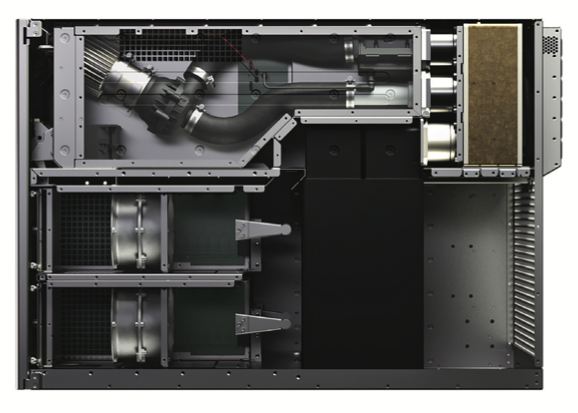

A machine using roughly 50 times as much power as a top-end GPU (23 kW against about 450 W) likewise has monumental cooling needs. As Cerebras describes it in their white paper, Achieving Industry Best AI Performance Through A Systems Approach, they solved this problem with internal water cooling, “like a giant gaming PC.” This setup uses tubes, pumps, fans and a heat exchanger, all of which take up significant space. Indeed, when you look at the device housing the WSE 2, you will see that it is primarily devoted to cooling.

In other words, although the chip itself measures only about 21.5 cm on a side and is wafer thin, its housing takes up 15RU, or about a third of a standard rack.

Hitting a Thermal Ceiling

The heroic efforts taken by the engineers at Cerebras to cool their innovative and impressive chip, while entirely justified, point to an emerging challenge: we are reaching a thermal management limit with many of the most common cooling methods as processors grow more and more powerful. For example, most air cooling in data centers can only handle about 10 kW to 25 kW per rack. That means that even though you could fit three of these 23 kW systems in a rack, most air-cooled data centers could perhaps handle only one.
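The arithmetic behind that claim can be sketched in a few lines of Python. This is a rough illustration only: the figures are the ones quoted in this article, and the names (`systems_coolable`, the constants) are made up for the example rather than taken from any vendor tool.

```python
# Figures quoted in the article; all names here are illustrative assumptions.
SYSTEM_POWER_KW = 23.0                 # power draw quoted for one WSE 2 system
SYSTEMS_THAT_FIT_PER_RACK = 3          # the article's physical-space estimate
AIR_COOLING_LIMITS_KW = (10.0, 25.0)   # typical air-cooled range per rack


def systems_coolable(limit_kw: float, system_kw: float = SYSTEM_POWER_KW) -> int:
    """Return how many whole systems fit under a per-rack cooling limit."""
    return int(limit_kw // system_kw)


# Heat load if the rack were physically filled.
full_rack_load_kw = SYSTEM_POWER_KW * SYSTEMS_THAT_FIT_PER_RACK
print(f"Full rack load: {full_rack_load_kw:.0f} kW")

for limit in AIR_COOLING_LIMITS_KW:
    # Cooling, not space, is the binding constraint here.
    n = min(systems_coolable(limit), SYSTEMS_THAT_FIT_PER_RACK)
    print(f"Air limit {limit:.0f} kW -> {n} system(s)")
```

At the top of the air-cooled range the budget covers a single system, and at the bottom of the range it covers none, even though the rack has room for more.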

Of course, the limitations of air cooling won’t be news to anyone. The thing is, even if you employed direct-to-chip cooling, spatial issues aside, you would hit a limit around 100 kW per rack. While this method could handle a rack of these processors, if AI compute demand is doubling roughly every three and a half months, as mentioned above, even this approach would run out of headroom within two generations.
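To make the headroom argument concrete, here is a minimal sketch that counts how many doublings a rack can absorb before it passes the direct-to-chip ceiling. It assumes, purely for illustration, that rack power doubles with each chip generation; real designs also gain efficiency, so treat this as a simplified upper bound on how quickly the limit arrives. The function name is hypothetical.

```python
RACK_LOAD_KW = 3 * 23.0            # three 23 kW systems, per the article
DIRECT_TO_CHIP_LIMIT_KW = 100.0    # approximate per-rack ceiling cited above


def generations_until_exceeded(load_kw: float, limit_kw: float) -> int:
    """Count doublings of load_kw needed before it exceeds limit_kw.

    Assumes power doubles every generation, which is a simplification.
    """
    if load_kw <= 0:
        raise ValueError("load_kw must be positive")
    generations = 0
    while load_kw <= limit_kw:
        load_kw *= 2
        generations += 1
    return generations


print(generations_until_exceeded(RACK_LOAD_KW, DIRECT_TO_CHIP_LIMIT_KW))
```

Under these assumptions a full rack starts at 69 kW, so a single doubling already takes it past 100 kW.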

One might think that these thermal challenges could be addressed by working with an array of chips, each with lower power requirements. While these architectures may help on the thermal management side, connecting chips in this way introduces speed bumps, because data moves more slowly between chips than within one. There is currently no way around the fact that it is more efficient to have everything on one chip.

Breaking Through with Immersion Cooling

We developed our two-phase immersion cooling system to address these issues at the data center level. In our early projects, which involved cryptocurrency mining, we were faced with the need to increase cooling efficiency while drastically reducing the footprint of the cooling technology itself. Our solutions fulfilled this objective perfectly.

As it turns out, our technology is also well-suited to help chip designers seeking to create increasingly powerful chips without the need to simultaneously create complicated and cumbersome cooling mechanisms for them. We have already demonstrated, for example, that we can cool 20 NVIDIA Tesla GPUs and Intel Xeon CPUs within only 3RU, just 20% of the size of the 15RU Cerebras unit.

And the possibilities are game-changing. LiquidStack’s DataTank™ Flat Racks are modular immersion cooling tanks with standard capacities of 200-240 kW. For extremely demanding applications, DataTanks can be equipped with high-performance condensers of up to 500 kW per flat rack DataTank. Entire racks of Cerebras chips, like discs in a jukebox, can be submerged and cooled, enabling a staggering amount of compute power.
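As a rough sense of scale, the sketch below divides each tank capacity by the 23 kW figure quoted earlier. It looks at the thermal budget only and says nothing about whether that many devices would physically fit in a tank. The 23 kW figure also covers a complete air- and water-cooled system, so an immersed chip might well draw less. The function and dictionary names are illustrative.

```python
SYSTEM_POWER_KW = 23.0  # per-system draw quoted earlier in the article

# Capacities quoted above; the labels are illustrative.
TANK_CAPACITIES_KW = {
    "standard (low end)": 200.0,
    "standard (high end)": 240.0,
    "high-performance condenser": 500.0,
}


def systems_per_tank(capacity_kw: float, system_kw: float = SYSTEM_POWER_KW) -> int:
    """Whole systems supported by a tank's heat-rejection capacity alone."""
    return int(capacity_kw // system_kw)


for label, capacity in TANK_CAPACITIES_KW.items():
    count = systems_per_tank(capacity)
    print(f"{label}: {capacity:.0f} kW -> {count} systems")
```

On thermal budget alone, that works out to 8 to 10 such systems per standard tank and 21 with the 500 kW condensers, compared with one per rack under typical air cooling.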

Thermal engineering is already an integral part of chip design. Unfortunately, because designers need to design in terms of existing cooling technology, the limitations of that technology place real limits on what can be designed. I believe that, as chip designers become more aware of the capabilities of immersion cooling, it will in turn transform their approach to chip design.

Frankly, this transformation will be unavoidable if we want to continue advancing in the fields of AI, HPC and edge computing. The good news is that two-phase immersion cooling is already enabling this transformation.